In a previous blog post I discussed how to detect DoS attacks in the game Garry’s Mod. TL;DR: Measure the time it takes the server to process a client’s requests. If it takes longer than normal (compared to other players), we ban the player.

But what if it doesn’t get that far? What if the process terminates before finishing the calculation?

Recently, some server owners have contacted me because they are struggling with attackers who cause their servers to crash immediately. It took me a good while to analyze what the cause of these crashes was.

Background

For a detailed description of the networking library in Garry’s Mod please refer to the documentation.

Basically, a server has a set of predefined network functions (“net messages”). Most addons implement some of them to enable communication between the server and client (bidirectional).

To give you a simple idea of the concept, here is a primitive example:

Server:

-- The server has to register each net message before it can be used

util.AddNetworkString("AnyIdentifierForThisMessage")

-- The server listens for the message and runs the function when it is received

net.Receive("AnyIdentifierForThisMessage", function(len, ply)

local data = net.ReadString()

print(string.format("Received data: %s", data))

end)

Client:

-- The client sends the message to the server

net.Start("AnyIdentifierForThisMessage")

net.WriteString("Hello, world!")

net.SendToServer()

Attack vector

The networking library is built upon the implementation of the Source Engine and inherits all of its limitations. Each message is limited to 65,533 bytes. To send larger payloads we have the following options, among others:

- Chunk payload into multiple messages (takes more effort to implement)

- Use HTTP (usually requires a third-party service or self-hosting)

- Compress the payload

We stick to the third option using our previous example:

Server:

-- The server has to register each net message before it can be used

util.AddNetworkString("ThisWillCauseTrouble")

-- The server listens for the message and runs the function when it is received

net.Receive("ThisWillCauseTrouble", function(len, ply)

-- Size of the compressed payload we expect

local size = net.ReadUInt(32)

local compressed_data = net.ReadData(size)

local decompressed_data = util.Decompress(compressed_data)

-- Further processing of the data

end)

Client:

-- The client sends the message to the server

local payload = "ABCD..."

local compressed = util.Compress(payload)

net.Start("ThisWillCauseTrouble")

net.WriteUInt(#compressed, 32)

net.WriteData(compressed, #compressed)

net.SendToServer()

This implementation is trivial and easy to understand.

Under the hood, util.Decompress uses the Lempel–Ziv–Markov chain algorithm (LZMA), or LZMA2 to be precise, a fast and lossless compression algorithm.

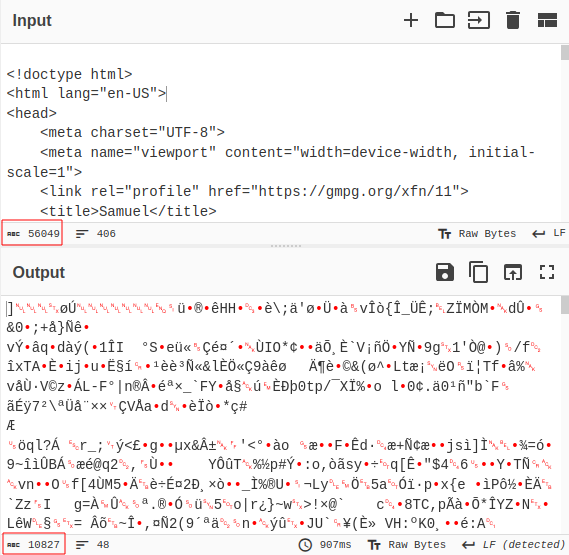

When we put the page source of this website into CyberChef, we get a compression ratio of \(56049/10827 \approx 5.2\):

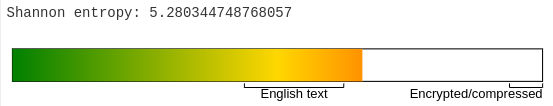

Additionally, the entropy of the page source is about \(5.3\).

When we supply data with lower entropy (e.g. JSON or log files), we can expect a compression ratio of roughly 30:1, although this varies widely with the input.

If we supply 1 MB of lowercase ‘a’, we get a compressed size of \(1{,}499\) bytes, a ratio of \(1{,}000{,}000/1499 \approx 667.1\).

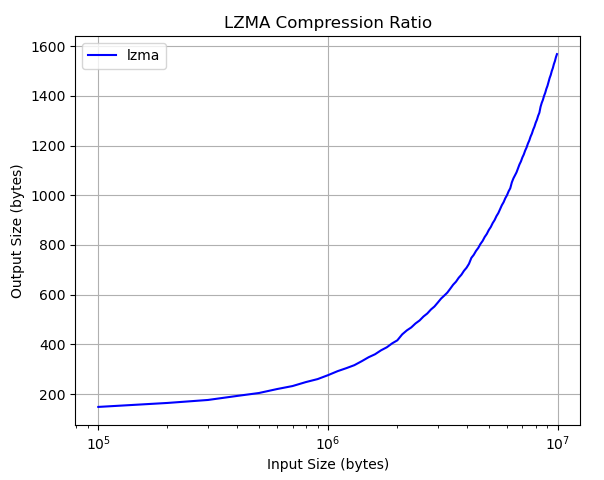

To get a visual understanding, we can plot the compression ratio using a small Python script with matplotlib:

One does not need a PhD to recognize that the compression of repeating input scales quite well. But what is the maximum input size we can supply without exceeding the aforementioned payload limit of 65,533 bytes?

We could analyze the LZMA implementation and precisely calculate this limit. To take a shortcut we will use a simple polynomial regression. Given the potential implications of my novel Python implementation for national security, I have chosen not to include it here.

449,650,947 bytes of input data will shrink down to exactly 65,533 bytes. The ratio is about 6,860:1.
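The ratios quoted so far are plain divisions of input size by compressed size. As a quick sanity check, here is a small sketch in plain Lua that recomputes them. The helper name `compressionRatio` is made up for this example; the byte counts are the ones from the text.

```lua
-- Hypothetical helper: ratio of original size to compressed size.
local function compressionRatio(originalBytes, compressedBytes)
    return originalBytes / compressedBytes
end

-- Byte counts taken from the measurements in the text.
local samples = {
    { name = "page source",           original = 56049,     compressed = 10827 },
    { name = "1 MB of 'a'",           original = 1000000,   compressed = 1499 },
    { name = "largest fitting input", original = 449650947, compressed = 65533 },
}

for _, s in ipairs(samples) do
    local ratio = compressionRatio(s.original, s.compressed)
    print(string.format("%-22s %.1f:1", s.name, ratio))
end
```

The last row is the interesting one: a message that still fits into a single net message can expand to several hundred megabytes on the receiving side.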

At this point we should see where this is going…

As with the classic zip bomb, a client can crash the server using highly compressed data:

-- The client sends the message to the server

local hugeData = string.rep("A", 400000000)

local compressed = util.Compress(hugeData)

net.Start("ThisWillCauseTrouble")

net.WriteUInt(#compressed, 32)

net.WriteData(compressed, #compressed)

net.SendToServer()

This will take the server a few seconds to process and create an unusually large memory allocation. How the server reacts in detail depends on what the receiving code does with the result of util.Decompress:

- If the data is discarded after decompression, the server might, at most, time out for a few seconds. Garbage collection will then free the memory.

- Often the decompressed data is passed into util.JSONToTable. If the payload is valid JSON, this will cause the server to either crash or time out.

- Even worse, the data might get validated, compressed and broadcast to all clients connected to the server.

EDIT: My assumption about util.JSONToTable was correct. See the Lua stack trace I received from a server owner.

Lua Stack:

[C][+0] [C] in JSONToTable

[L][+1] addons/mc_quests/lua/mqs/core/sh_util.lua:27 in field TableDecompress Line 25 -> 30

[L][+2] addons/mc_quests/lua/mqs/core/sv_init.lua:30 in anonymous function Line 25 -> 37

[C][+3] [C] in pcall

[L][+4] lua/nova/modules/networking/netmessages.lua:33 in anonymous function Line 13 -> 43

[C][+5] [C] in xpcall

[L][+6] [string "__phys_aa_caller.lua"]:7 in anonymous function Line 6 -> 8

This attack is used in the wild to crash game servers and even to blackmail their owners on a large scale. Additionally, it is hard to detect, as the net.Receive callback often does not even get to finish processing.

Detection

Now the challenge is to implement a working solution that prevents the server from crashing and bans the responsible client. It also must not produce false positives. Analyzing the content of the compressed message in real time is far too computationally expensive and complex, so we must find another solution.

This implementation is part of my Security Solution for Garry’s Mod servers: Nova Defender

Decompression Size Limit

When reading the documentation for util.Decompress, we find a second parameter, maxSize.

string or nil util.Decompress( string compressedString, number maxSize = nil )

If this limit is reached, the decompression aborts and returns nil. As almost no developer sets this limit, we can enforce it by default by overriding the function:

local maxDecompressedSize = 100000000 // 100 MB

local oldDecompress = util.Decompress

function util.Decompress(compressed, limit, ...)

// Limit is already set

if limit and limit > 0 then

return oldDecompress(compressed, limit, ...)

end

// Call the actual decompress function

local decompressed = oldDecompress(compressed, maxDecompressedSize, ...)

if decompressed == nil then

ErrorNoHalt("util.Decompress blocked by Nova Defender to prevent server crash.\n")

end

return decompressed

end

This also has the advantage that, when the limit is hit, the decompressed data is never allocated in our Lua environment.
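Addon developers do not have to rely on such an override; they can pass the limit themselves. The following is a minimal sketch of the earlier receiver doing so, assuming a cap of 1 MB is enough for this message. `MAX_PAYLOAD_BYTES` and `decompressBounded` are illustrative names, and the `net`/`util` guard only exists so the snippet also loads outside the game.

```lua
-- Assumed upper bound for the decompressed payload of this message (1 MB).
local MAX_PAYLOAD_BYTES = 1024 * 1024

-- Hypothetical helper: decompress with an explicit limit.
-- `decompress` is expected to behave like util.Decompress(data, maxSize)
-- and to return nil when the limit is exceeded or the data is invalid.
local function decompressBounded(compressed, decompress)
    local data = decompress(compressed, MAX_PAYLOAD_BYTES)
    if data == nil then
        return nil, "payload rejected: invalid or larger than the limit"
    end
    return data
end

-- Only runs inside Garry's Mod, where the `net` and `util` libraries exist.
if net and util then
    net.Receive("ThisWillCauseTrouble", function(len, ply)
        local size = net.ReadUInt(32)
        local data, err = decompressBounded(net.ReadData(size), util.Decompress)
        if not data then
            print(err)
            return
        end
        -- Further processing of the data
    end)
end
```

Passing the decompression function as an argument keeps the helper testable without a running server; inside the game it is simply called with util.Decompress.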

Compression Ratio

With a simple division we can calculate the compression ratio after the decompression has been performed. If the decompression was aborted, we calculate with maxDecompressedSize instead, which gives us a lower bound for the ratio.

local maxDecompressedSize = 100000000 // 100 MB

local minDecompressedSize = 10000000 // 10 MB

local maxCompressionRatio = 100 // Assumed threshold for illustration, tune it to your addons

local oldDecompress = util.Decompress

function util.Decompress(compressed, limit, ...)

local compressedSize = #compressed

// Limit is already set

if limit and limit > 0 then

return oldDecompress(compressed, limit, ...)

end

// Call the actual decompress function

local decompressed = oldDecompress(compressed, maxDecompressedSize, ...)

if decompressed == nil then

ErrorNoHalt("util.Decompress blocked by Nova Defender to prevent server crash.\n")

end

local decompressedSize = decompressed and #decompressed or maxDecompressedSize

local ratio = math.Round(decompressedSize / compressedSize, 2)

// Check for compression ratio

if decompressedSize > minDecompressedSize and ratio > maxCompressionRatio then

// Do something against the client

end

return decompressed

end
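The check itself does not depend on the game API, so it can be looked at in isolation. This is only a sketch: `isSuspicious` is a made-up name and the threshold of 100 is an assumed value, not one taken from Nova Defender.

```lua
local maxDecompressedSize = 100000000 -- 100 MB, same limit as in the override
local minDecompressedSize = 10000000  -- 10 MB, same minimum as in the override
local maxCompressionRatio = 100       -- assumed threshold, for illustration only

-- `decompressed` is the string returned by util.Decompress, or nil if it aborted.
-- Returns whether the payload looks like a decompression bomb, plus the ratio used.
local function isSuspicious(compressedSize, decompressed)
    -- An aborted decompression produced at least maxDecompressedSize bytes,
    -- so using that value yields a lower bound for the real ratio.
    local decompressedSize = decompressed and #decompressed or maxDecompressedSize
    local ratio = decompressedSize / compressedSize
    return decompressedSize > minDecompressedSize and ratio > maxCompressionRatio, ratio
end

-- A full-size net message (65,533 bytes) whose decompression was aborted.
local flagged, ratio = isSuspicious(65533, nil)
print(flagged, string.format("lower bound %.0f:1", ratio))

-- A small, ordinary payload: 2,000 bytes that decompress to 60,000 bytes.
print((isSuspicious(2000, string.rep("x", 60000))))
```

Requiring both a minimum decompressed size and a minimum ratio keeps small but highly repetitive payloads, which are harmless, from being flagged.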

Edge Cases

We have to consider multiple edge cases:

- We have to ensure that util.Decompress was called from inside a net.Receive.

- The supplied compressed data must also originate from the client. We can achieve this by checking whether the payload size from the net.Receive is greater than or equal to the size of compressed.

- Implement a whitelisting system, as some addons are poorly written and we can’t guarantee compatibility with all of them.
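The first two checks could be approximated by remembering which net message is currently being handled. The sketch below is one possible approach and not Nova Defender's actual implementation; `wrapReceiver`, `currentMessage` and `couldComeFromClient` are hypothetical names. It relies on the `len` argument of a net.Receive callback being given in bits.

```lua
-- Context of the net message being processed right now (nil outside of receivers).
local currentMessage = nil

-- Wrap a receiver so we know when code runs inside a net.Receive callback.
local function wrapReceiver(callback)
    return function(len, ply)
        local previous = currentMessage
        currentMessage = { bits = len, ply = ply }
        local ok, err = pcall(callback, len, ply)
        currentMessage = previous -- always restore, even if the callback errored
        if not ok then
            error(err, 0)
        end
    end
end

-- True if `compressed` is small enough to have been part of the current message.
local function couldComeFromClient(compressed)
    if currentMessage == nil then
        return false -- not called from inside a receiver
    end
    return currentMessage.bits / 8 >= #compressed
end
```

Inside the game, a receiver would be registered as `net.Receive(name, wrapReceiver(callback))`, and the util.Decompress override would call `couldComeFromClient(compressed)` before treating a high ratio as an attack. The size comparison is a necessary condition only: it rules out data that cannot have come from the message, but it does not prove that the data did.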

Using both techniques in combination, we can create a powerful detection for most cases.